Introduction

Artificial intelligence (AI) has become a part of modern life and has transformed fields such as healthcare and education. As AI systems grow more widespread and powerful, the ethical frameworks on which they are built have not been a critical priority for industry leaders and policymakers. Not every AI system is built the same way, however, and organizations differ in their approach to ethics. This report compares the ethical AI frameworks of three of the biggest names in the industry, OpenAI, Google DeepMind, and DeepSeek, by evaluating their approaches to AI safety, bias mitigation, transparency, and ethical governance, while also keeping in mind each company's cost-effectiveness and openness.

Purpose and Focus of the Report

The goal of this report is to identify which company has the strongest commitment to ethics without compromising financial sustainability. OpenAI is probably the most popular and recognizable of the three, and it emphasizes responsible AI usage. Google DeepMind focuses on long-term safety and interpretability and invests heavily in research on both. DeepSeek is a rapidly emerging Chinese AI lab that prioritizes open-source development and low-cost accessibility.

This report bases its assessment on three criteria: transparency, safety, and alignment with human values. Transparency is how openly a company communicates the way its AI systems are built and developed. Safety is what the company does to minimize harmful outcomes, such as implementing fail-safe systems or testing. Alignment with human values is how well the AI platform respects human rights, avoids unintended harm, and keeps its costs in line with the public interest. While other factors could be relevant, these three provide a strong foundation for judging whether a platform takes a responsible approach to AI development.

The Case for OpenAI

In a world that is constantly changing, and where AI can reshape society, it is essential to recommend a framework that is both ethical and practical for AI development.

OpenAI is the most established of the three in terms of AI development, transparency, safety, and alignment with human values. It set off the “AI race” when it launched ChatGPT in 2022. Since then, the organization has implemented many different testing protocols to prevent or minimize potential risks. One example is how “models handle controversial topics. Rather than defaulting to extreme caution, the spec encourages models to ‘seek the truth together’ with users while maintaining clear moral stances on issues like misinformation or potential harm” (Robison). This approach is intended to reduce bias and give users more honest responses from OpenAI’s models, such as ChatGPT. Moreover, it aligns with national efforts: the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence emphasizes the need for AI systems to be built ethically and tested for security and fairness (White House). OpenAI’s models have undergone safety evaluations, and the company has made commitments consistent with the order.

In addition to safety, OpenAI invests heavily in transparency. Its public documentation of model behavior, training data sources, and risk factors is easily accessible, which allows researchers, policymakers, and even the general public to understand how its models work. This “openness ensures that AI operations are not only visible but also comprehensible and accountable, thereby enhancing trust and fostering collaboration in AI development and application” (Solaiman). OpenAI balances innovation with ethical responsibility while remaining one of the most commercially successful AI companies.

The Case for Google DeepMind

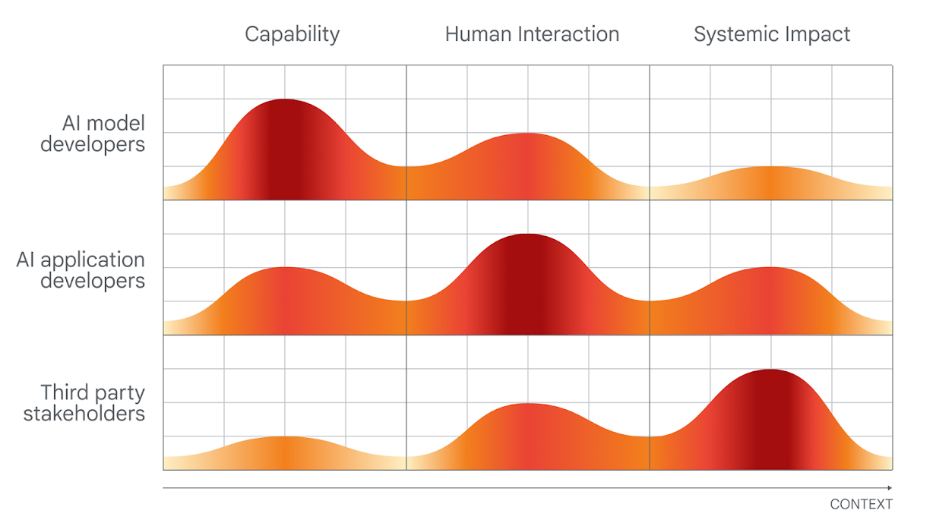

Google DeepMind focuses more on long-term safety and interpretability. Its record runs from past successes such as AlphaGo to its current contributions to AI ethics. DeepMind is a leader in developing AI systems around human values and interests, so its alignment with human values is among the best. Its evaluation approach holds that “integrating evaluations of a given risk of harm across these layers provides a comprehensive evaluation of the safety of an AI system” (Issac). This ensures that potential harms are identified not only at the model level but also at the deployment and usage stages. DeepMind also treats AI safety as a shared responsibility in which AI developers, application developers, and public stakeholders all evaluate and manage risks throughout the AI lifecycle. Developers are key to assessing a model’s capabilities, while public institutions and stakeholders are key to understanding its broader impacts and ensuring safe deployment.

The diagram above shows the three layers of evaluation that DeepMind proposes for its framework: capability, human interaction, and systemic impact. Responsibility is not neatly divided among the groups involved, and no single group holds all of it. Instead, primary responsibility at each layer depends on who is best placed to perform evaluations there. As the diagram shows, model developers, application developers, and stakeholders each carry a different weight of responsibility across the three layers.

The Case for DeepSeek

DeepSeek is an up-and-coming AI powerhouse from China, known for prioritizing open-source models and low-cost accessibility. Unlike OpenAI and DeepMind, DeepSeek aims to make capable AI available to people with fewer resources. The organization has grown rapidly since releasing its model, which is both high-performing and open-source, an unusual combination. Because the model is free or inexpensive for the public to use, this approach encourages more innovation. Even so, the U.S. government has raised concerns. One concern is “the potential security implications of DeepSeek. This comes amid news that the U.S. Navy has banned the use of DeepSeek among its ranks due to potential security and ethical concerns” (Chow), as the U.S. agency aims to “maximize the use of American-made AI” (Wolfe). It is true that DeepSeek is more open, which can lead to innovation, but openness also carries risks if it is not properly regulated. DeepSeek has fewer ethical guidelines and regulations in place, which raises fears that an open-source model will lack accountability measures when it comes to misuse and foreign surveillance.

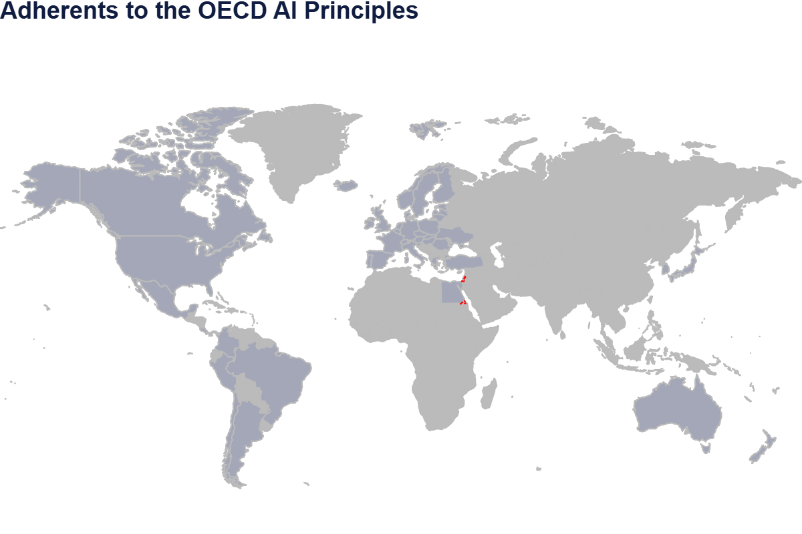

DeepSeek is transparent in sharing its model and data, but that transparency does not fully extend to internal governance, testing procedures, or ethical oversight frameworks. One relevant standard is the OECD AI Principles, which “promote the use of AI that is innovative and trustworthy” (OECD). These principles focus on making sure that AI technologies are developed responsibly and benefit society as a whole. However, China is not among the countries that have adopted them, which raises red flags for AI developed there, including DeepSeek. This fits a wider pattern in which “overall, countries’ initiatives still retain predominantly ‘soft’ regulatory approaches for AI” (Lorenz). It does not by itself mean that DeepSeek is being developed irresponsibly, but it could leave vulnerabilities in areas such as data security and misinformation, which weakens its ethical standing. The accompanying figure shows the countries that follow the OECD principles, meaning they have committed to AI with high ethical standards for the benefit of all people.

Survey: Public Use and Concerns

To gather firsthand information from everyday users of AI tools, I conducted a brief survey as a primary source. One question asked participants, “Which AI tools do you use, if any?” with the choices ChatGPT, DeepMind, DeepSeek, or none. The results showed that OpenAI’s ChatGPT was by far the most recognized and most frequently selected option. Another prompt was “Concerns for Ethical Use of AI?” While responses varied somewhat, participants were mainly concerned about data privacy and the accuracy of the information AI provides. These results show that people continue to use AI even while they are aware of its ethical problems, which is why it is important for a company to prioritize a responsible approach to AI development.

Evaluation and Recommendation

After evaluating the approaches of OpenAI, Google DeepMind, and DeepSeek to ethical governance, transparency, and accessibility, the most balanced and ethically sound is OpenAI, which has committed to responsible development through documentation, safety testing, and alignment with regulation. Its popularity and reputation reflect this, and its framework is the most suitable model for ethically grounded AI development. It not only promotes trust but also shows that AI can be used for positive and truth-seeking purposes.

For organizations looking to build an AI system, OpenAI’s framework can serve as a template. DeepMind’s approach to shared responsibility and layered risk evaluation is also strong and offers an innovative element that can be incorporated, for example by combining OpenAI’s transparency with DeepMind’s layered safety assessment.

On the other hand, DeepSeek’s open-source, low-cost approach is promising for innovation and accessibility, but its lack of recognized commitments on ethical concerns, especially around governance and safety, makes it too risky to be used widely.

In conclusion, as AI technology advances and becomes part of our daily lives, ethical frameworks should be a critical priority in its development. This analysis shows that not all AI organizations are equal, because they do not all prioritize ethical standards. OpenAI stands out as the most popular of the three and as the one with the strongest ethical infrastructure and transparency. DeepMind contributes valuable innovations in shared responsibility, and DeepSeek demonstrates the growing power of accessibility to foster innovation. Ultimately, ensuring that AI development is ethically sound requires more than innovation: it needs accountability and global collaboration, with models held to a high ethical standard. OpenAI is the clear choice because it can serve as that standard, helping to ensure that AI serves the public rather than harms it.

Works Cited

Chow, Andrew R. “Why DeepSeek Is Sparking Debates Over National Security, Just Like TikTok.” Time, 6 Feb. 2025, time.com/7210875/deepseek-national-security-threat-tiktok/.

White House. “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” National Archives and Records Administration, 30 Oct. 2023.

Robison, Kylie. “OpenAI Is Rethinking How AI Models Handle Controversial Topics.” The Verge, 12 Feb. 2025, www.theverge.com/openai/611375/openai-chatgpt-model-spec-controversial-topics.

Issac, William. “Evaluating Social and Ethical Risks from Generative AI.” Google DeepMind, 19 Oct. 2023.